Combine existing security concepts and best practices to design more secure distributed applications.

Introduction

A common approach to modernizing applications is to use APIs and decompose them into smaller units that typically live in containers. These approaches involve many concepts and technologies that are not always well understood, leading to poor application security postures. In addition, solution architects and developers who are creating the applications often lack the knowledge to select and apply the required security controls.

This two-part series brings together existing ideas, principles, and concepts such as end-to-end trust, authentication, authorization, and API gateways to provide a high-level blueprint for modern API- and microservices-based application security. To address security in an API- and microservices-based architecture, read both parts of this series to:

- Gain a high-level understanding of modern API-, services-, and microservices-based application architectures.

- Become aware of key security concerns with these application architectures.

- Understand how to best secure application microservices and their APIs.

This series is for you if you are a solution architect, a software developer, or an application security professional who is faced with securing APIs and their microservices.

Prerequisites

Some previous exposure to APIs and microservices-based architectures will help you grasp the security aspects discussed, but it is not required.

Estimated time

Take about 30 to 45 minutes to read both parts of the series. Part 1 should take about 20 to 25 minutes.

What are services and microservices?

According to Wikipedia, “Microservices are a software development technique – a variant of the service-oriented architecture (SOA) architectural style that structures an application as a collection of loosely coupled services. In a microservices architecture, services are fine-grained, and the protocols are lightweight.”

For decades, monolithic applications were the order of the day. An application contained a set of several different services in order to deliver business functionality. The monolithic application’s services and functions had the following characteristics and challenges:

- They were tightly coupled together.

- They did not scale well (especially when different components had different resource requirements).

- Monolithic applications were often large and too complex to be understood by a lone developer.

- They slowed down development and deployment.

- They did not protect components from the impact of issues from other components.

- The applications were difficult to rewrite if you needed to adopt new frameworks.

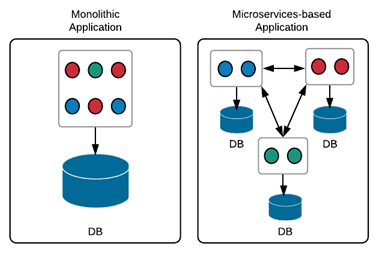

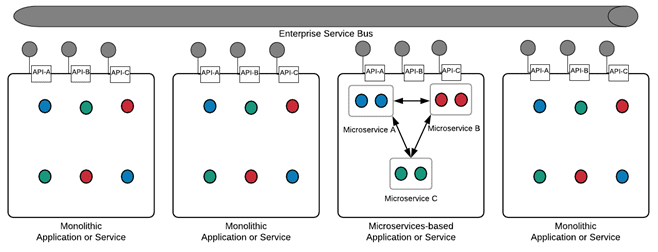

Consider the following illustration comparing the architectures of monolithic applications and microservices-based applications.

Figure 1: Comparison of monolithic and microservices-based application architectures

A movement that began in the late 1990s focused on service-oriented architecture (SOA). Companies, particularly larger enterprises, started analyzing systems deployed across their organizations, looking for redundant services within different application systems. They then created a unique set of services and exposed legacy systems as services that could be integrated to deliver applications through new or existing channels.

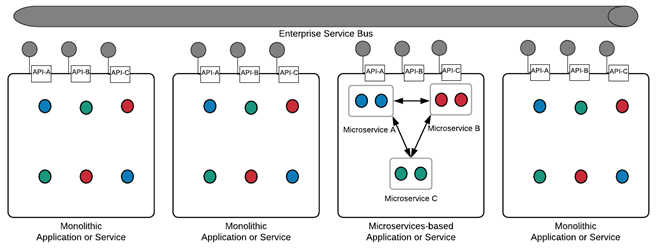

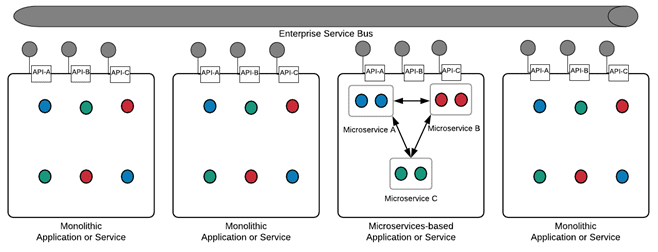

Part of this process involved decomposing existing application systems into a set of reusable modules and facilitating development of various applications by combining those services. Each service in turn contained several tightly coupled functions related to a particular business area exposed through application programming interfaces (APIs), most commonly as SOAP and XML-based web services that communicated over an enterprise message bus. The following illustration shows an enterprise service bus connecting SOA and microservices-based applications and services:

Figure 2: Enterprise service bus connecting SOA and microservices-based applications and services

The SOA movement was further propelled into popularity with the rise of web services in the early 2000s. (See the Service-Oriented Architecture Scenario article published by Gartner in 2003.)

Over the past decade, another movement started that further breaks these service “monoliths” into yet smaller microservices by decoupling the tightly coupled functions of each service. Each microservice typically resides in its own container and focuses on a specific functional area that was previously provided by the service and tightly coupled with other functions. The individual microservices typically have their own datastore to provide further independence from other components of the application system. However, data consistency needs to be maintained across the system, which brings new challenges.

APIs are still used to expose the functionalities provided by microservices. However, lighter protocols and formats such as REST and JSON are used instead of the heavier SOAP, XML, and web services. This further decomposition does not necessarily lead to additional APIs being exposed to service consumers if the set of functions provided by the decomposed service or application remains the same. In addition, some microservices might only provide internal application functionality to the other microservices that are part of a given application group of APIs. They might not expose any APIs for consumption by other applications or services.

Microservices offer a few advantages over monolithic architectures and lead to more flexible and independently deployable systems. In the past, when several developers worked on different functions that made up an (often) large service or application, an issue with one function prevented successful compilation and roll-out of the entire service or application. By decoupling those functions into microservices, the dependency on other functions of the application system is removed. Issues with development and roll-out in one area no longer affect the others. This approach also lends itself well to DevOps and agile models, shifting away from the need to design the entire service at one time and allowing for continuous design and deployment.

While microservices address the issues related to monolithic applications described earlier, this architecture brings about a new set of challenges. Examples include the complexities of distributed systems development (such as inter-service communication failure and requests involving multiple services), deployment, and increased memory consumption (due to added overhead of runtimes and containers).

What about APIs?

Let’s visit Wikipedia again to understand an application programming interface or an API: “In general terms, it is a set of clearly defined methods of communication among various components. A good API makes it easier to develop a computer program by providing all the building blocks, which are then put together by the programmer.”

APIs are a set of clearly defined communication methods between software components. APIs have been around for quite a while and have made it easy for independent teams to build software components that can work together without knowledge of the inner workings of the others. Each component only needs to know what functions are exposed as APIs by the other component it wants to interact with. This architecture creates a clean separation layer and promotes modularity, where each component in a system can focus on a certain set of functionalities and expose the useful features to the outside world for consumption while hiding the complexities inside.

The focus of this article is on modern web APIs provided by many of today’s web-enabled applications. RESTful APIs, or those that conform to Representational State Transfer (REST) constraints, are the most common. These APIs communicate using a set of HTTP messages, typically using JavaScript Object Notation (JSON), or in some cases Extensible Markup Language (XML), to define the structure of the message. Additionally, the shift in recent years to REST-style web resources and resource-oriented architecture (ROA) gave rise to the popularity of RESTful APIs.

The API gateway and the post-monolithic world

In the world of monolithic applications, all functions reside in the same walled garden, protected by a single point of entry, which is typically the login functionality. After the end-user authenticates and passes this point, access is provided to the application and all of its functionality without further authentication. This is due to an architecture where all functions are tightly coupled inside a trust zone and cannot be invoked by outsiders to the application. In this scenario, each function inside the application can be designed to either perform further authorization (AuthZ) checks or not, depending on the requirements and granularity of the entitlements scheme.

When you break these monoliths into smaller components such as microservices, you no longer have the single point of entry that you had with the monolithic application. Now each microservice can be accessed independently through its own API, and it needs a mechanism to ensure that a given request is authenticated and authorized to access the set of functions requested.

However, if each microservice performs this authentication individually, the end-user’s full set of credentials is required each time, increasing the likelihood that long-term credentials are exposed and reducing usability. In addition, each microservice is required to enforce the security policies that are applicable across all functions of the application that the microservice belongs to, such as JSON threat protection for a Node.js application.

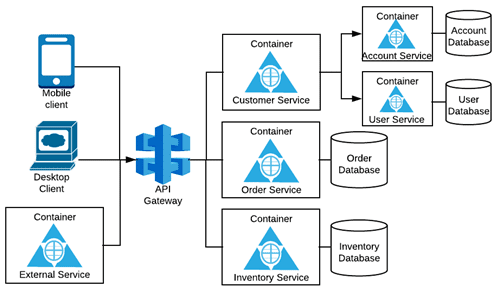

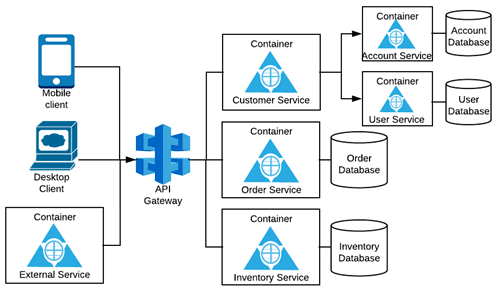

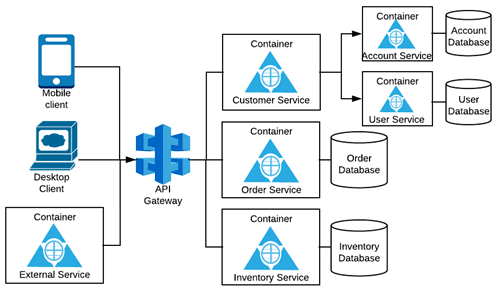

This where the API Gateway comes in, acting as a central enforcement point for various security policies including end-user authentication and authorization. Consider the following diagram:

After the end-users go through a “heavy” verification process involving their full set of long-term credentials such as user name, password, and two-factor authentication, a security token is issued that can be used for “light” authentication and further interaction with downstream APIs from the API gateway. This process minimizes exposure of the users’ long-term credentials to the system components, because they are only used once and exchanged for a token that has a shorter lifespan.

The API gateway can use a security token service along with an Identity and Access Manager (IAM) to handle the verification of end-user identity and issuance of security tokens, among other security token-related activities such as token exchange (more on this later). The API gateway acts as a guard, restricting access to the microservices APIs by ensuring that a valid security token is present and that all policies are met before granting access downstream, creating a virtual walled garden.
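
The "heavy" and "light" steps described above can be sketched roughly as follows. This is a minimal illustration built on the Python standard library, not a production token format. The names `issue_token`, `gateway_check`, `SIGNING_KEY`, and the in-memory `USERS` store are assumptions made for this example; a real deployment would typically rely on an IAM product and a standard token format.

```python
import base64
import hashlib
import hmac
import json
import time

# Assumptions for illustration only: a signing key held by the security
# token service and an in-memory credential store. The unsalted hash is a
# stand-in for whatever verification a real IAM performs.
SIGNING_KEY = b"demo-key-not-for-production"
TOKEN_LIFETIME_SECONDS = 300  # much shorter-lived than the credentials
USERS = {"alice": hashlib.sha256(b"correct horse").hexdigest()}


def _sign(payload):
    return hmac.new(SIGNING_KEY, payload, hashlib.sha256).digest()


def issue_token(username, password, now=None):
    """'Heavy' step: check long-term credentials once, return a token."""
    digest = hashlib.sha256(password.encode()).hexdigest()
    if not hmac.compare_digest(USERS.get(username, ""), digest):
        raise PermissionError("invalid credentials")
    issued_at = time.time() if now is None else now
    claims = {"sub": username, "exp": issued_at + TOKEN_LIFETIME_SECONDS}
    payload = json.dumps(claims).encode()
    encode = base64.urlsafe_b64encode
    return encode(payload).decode() + "." + encode(_sign(payload)).decode()


def gateway_check(token, now=None):
    """'Light' step: verify signature and expiry; no credentials needed."""
    try:
        payload_b64, signature_b64 = token.split(".")
        payload = base64.urlsafe_b64decode(payload_b64)
        signature = base64.urlsafe_b64decode(signature_b64)
    except ValueError:
        raise PermissionError("malformed token")
    if not hmac.compare_digest(_sign(payload), signature):
        raise PermissionError("bad signature")
    claims = json.loads(payload)
    current = time.time() if now is None else now
    if claims["exp"] <= current:
        raise PermissionError("token expired")
    return claims
```

In this sketch the password is seen only by `issue_token`. Every later request carries just the token, so downstream components never handle the long-term credentials.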

In addition to the previously described security benefits, the API gateway has the following non-security features:

- It can expose different APIs for each client.

- The gateway routes requests from different channels (for example desktop vs. mobile) to the appropriate microservice for that channel.

- It allows for the creation of “mash-ups” using multiple microservices that may be too granular to access individually.

- The API gateway abstracts service instances and their location.

- It hides service partitioning from clients (which can change over time).

- It provides protocol translation.
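
As a rough sketch of the routing and "mash-up" features listed above, the following example routes requests by channel and composes two fine-grained services into one response. All service names, paths, and payloads are hypothetical and chosen only for illustration.

```python
# Hypothetical backends standing in for microservices behind the gateway.
def product_service(product_id):
    return {"id": product_id, "name": "Widget"}


def review_service(product_id):
    return [{"product": product_id, "stars": 5}]


def product_page_desktop(product_id):
    """Mash-up: combine two fine-grained services into one response."""
    return {
        "product": product_service(product_id),
        "reviews": review_service(product_id),
    }


def product_page_mobile(product_id):
    """Mobile clients get a lighter payload without reviews."""
    return {"product": product_service(product_id)}


# Routing table keyed by (channel, path). Clients only see the gateway's
# API, never the service instances or how they are partitioned.
ROUTES = {
    ("desktop", "/product"): product_page_desktop,
    ("mobile", "/product"): product_page_mobile,
}


def handle(channel, path, product_id):
    handler = ROUTES.get((channel, path))
    if handler is None:
        raise LookupError(f"no route for {channel} {path}")
    return handler(product_id)
```

Because clients depend only on the routing table's public paths, the services behind it can be split or merged later without changing the client-facing API.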

The importance of user-level security context and end-to-end trust

In many multi-tiered systems, the end-users authenticate through an agent (such as a browser or a mobile app) to an external-facing service endpoint. The other downstream components in turn enforce mutual authentication either by using service accounts over Transport Layer Security (TLS), or mutual TLS, to establish service-level trust.

A key problem with this design is that the service provider gives access to all data provided by the set of functions that the service account is permitted to use. It does not consider the authenticated end-user’s security context. This approach is too permissive and is against the principle of least privilege.

For example, in a system where user account details are provided by a user account service, the service consumer could ask for and gain access to any user’s account details by simply authenticating to the service provider. An attacker with an agent capable of connecting to a service provider (using compromised service account credentials or through a compromised consumer that has established service trust with the provider) can connect and access data belonging to any user. Another problem with this design is that it does not allow for comprehensive auditing of actions.

These problems highlight the importance of establishing an authenticated end-user’s security context before allowing access to data provided by a service. A security token should be required by the service provider to determine the user’s security context before servicing the request. These tokens must be signed so the authenticity of the issuer and integrity of the token data can be verified. A token should also be encrypted if there is a confidentiality concern related to data in the token (for example, account numbers).
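
To make this concrete, here is a minimal sketch of a user account service that checks a signed token and returns only the data belonging to the authenticated user. The names `make_token`, `get_account`, `TOKEN_KEY`, and the sample data are assumptions for illustration. The shared HMAC key keeps the example short; real systems often use asymmetric signatures so that service providers can verify tokens without being able to mint them.

```python
import hashlib
import hmac
import json

TOKEN_KEY = b"demo-key-not-for-production"  # assumption: shared with the issuer
ACCOUNTS = {"alice": {"balance": 120}, "bob": {"balance": 75}}
AUDIT_LOG = []  # records which user attempted to access whose data


def make_token(claims):
    """Stand-in for the token issuer: returns (payload, signature)."""
    payload = json.dumps(claims, sort_keys=True).encode()
    return payload, hmac.new(TOKEN_KEY, payload, hashlib.sha256).hexdigest()


def get_account(token, requested_user):
    """Serve account data only within the token holder's security context."""
    payload, signature = token
    expected = hmac.new(TOKEN_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, signature):
        raise PermissionError("token signature is invalid")
    claims = json.loads(payload)
    allowed = claims.get("sub") == requested_user
    outcome = "allowed" if allowed else "denied"
    AUDIT_LOG.append((claims.get("sub"), requested_user, outcome))
    if not allowed:
        raise PermissionError("token does not belong to the requested user")
    return ACCOUNTS[requested_user]
```

Unlike a service account, a token issued for `alice` cannot be used here to read `bob`'s account, and each attempt is attributable to a specific user in the audit log.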

In addition, an API invocation may involve calls across several microservices downstream from the API gateway. User-level end-to-end (E2E) trust is about communicating the authenticated end-user’s security context to all involved parties across the entire journey and allowing each party to take appropriate action. As noted at the start of this section, the user’s security context often ends at the external endpoint in traditional application architectures, and all downstream components rely on service-level trust instead. There are two ways that user-level E2E trust can be established across all microservices belonging to a given API group or application. One involves using a token-exchange service, and the other relies on E2E trust tokens.

When using a token-exchange service to establish user-level E2E trust, the application services can use it to exchange a given security token for another one that has the required scope and protocol for the next service in the call chain. The token-exchange service ensures that the new token has the appropriate scope and protocol required by the downstream service. This limits the amount of access granted and allows a system of heterogeneous protocols to be used by the different microservices of an application, for example exchanging a JWT for a SAML token. Depending on the architecture, the security token and token-exchange services may be provided by the same entity, particularly if all calls go through the API gateway. Each microservice verifies the authenticity of the security token received and enforces further authZ if required based on the user’s security context, claims, and scope provided. More details about authZ are discussed in the second part of this series.
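
The scope-narrowing part of a token exchange can be sketched as follows. This is an illustration under stated assumptions, not a standard implementation: `exchange_token`, `SERVICE_SCOPES`, and the scope names are hypothetical, and protocol conversion (such as JWT to SAML) is not modeled.

```python
import hashlib
import hmac
import json

STS_KEY = b"demo-key-not-for-production"  # assumption: held by the token service

# Hypothetical policy: the scopes each downstream service may ever receive.
SERVICE_SCOPES = {
    "orders": {"orders:read", "orders:write"},
    "billing": {"billing:read"},
}


def sign(claims):
    payload = json.dumps(claims, sort_keys=True).encode()
    return payload, hmac.new(STS_KEY, payload, hashlib.sha256).hexdigest()


def verify(token):
    payload, signature = token
    expected = hmac.new(STS_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, signature):
        raise PermissionError("invalid token signature")
    return json.loads(payload)


def exchange_token(token, target_service):
    """Swap a token for one scoped to the next service in the call chain."""
    claims = verify(token)
    granted = set(claims["scope"]) & SERVICE_SCOPES[target_service]
    if not granted:
        raise PermissionError(f"no scope available for {target_service}")
    return sign({
        "sub": claims["sub"],
        "aud": target_service,
        "scope": sorted(granted),
    })
```

The new token keeps the user's identity (`sub`) but carries only the scopes the target service needs, so a token leaked from one service is of limited use against another.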

Another way to achieve E2E trust across the entire journey is to use a single security token as the E2E trust token, issued at the gateway by the security token service. The gateway performs the security policy checks required by the application or API group as before, then provides an E2E trust token for downstream consumption. In this scenario, all microservices involved must have the same scope, or the scope enforcement must be handled by each one independently. In addition, all microservices must support the same protocol. Upon receiving the E2E trust token, the receiving microservice verifies the signature (and performs decryption if required), performs authZ, and processes the request based on the claims and scope provided. If there are other microservices in the journey, the token is passed down and the same verification and authZ process takes place at each downstream microservice. While this model removes the round trip to the token-exchange service for each call across the journey, it does not provide the same level of flexibility and access control as using a token-exchange service.
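
The E2E trust token model can be sketched in the same style. Here a single token is passed unchanged down a two-service call chain, and each service verifies the signature and enforces its own scope. The service names, scopes, and helper functions are hypothetical, and decryption is omitted.

```python
import hashlib
import hmac
import json

TRUST_KEY = b"demo-key-not-for-production"  # assumption: known to all services


def sign(claims):
    """Stand-in for the security token service at the gateway."""
    payload = json.dumps(claims, sort_keys=True).encode()
    return payload, hmac.new(TRUST_KEY, payload, hashlib.sha256).hexdigest()


def verify_and_authorize(token, required_scope):
    """Performed independently by every microservice that receives the token."""
    payload, signature = token
    expected = hmac.new(TRUST_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, signature):
        raise PermissionError("invalid token signature")
    claims = json.loads(payload)
    if required_scope not in claims.get("scope", []):
        raise PermissionError(f"missing scope {required_scope}")
    return claims


def inventory_service(token, item):
    verify_and_authorize(token, "inventory:read")
    return {"item": item, "in_stock": 3}


def order_service(token, item):
    claims = verify_and_authorize(token, "orders:read")
    # The same token is passed down unchanged to the next microservice.
    stock = inventory_service(token, item)
    return {"user": claims["sub"], "stock": stock}
```

No round trip to a token service is needed between the two calls, but the one token must already carry every scope required anywhere in the chain.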

With both of these models, user-level E2E trust is established among microservices belonging to the same application trust zone requiring the enforcement of the same set of security policies and using the same identity provider (IdP). If access is required to microservices in another trust zone and with a different IdP, it must go through the gateway where the API for that microservice is presented to enforce the required set of security policies for that trust zone. Security tokens issued for one application should not be accepted by microservices in another.
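
One way to picture the trust-zone boundary is a microservice that accepts only tokens signed by its own zone's IdP. The sketch below assumes one signing key per IdP; the IdP names, keys, and the `issue` and `accept` helpers are hypothetical.

```python
import hashlib
import hmac
import json

# Assumption: each trust zone has its own IdP with its own signing key.
IDP_KEYS = {
    "payments-idp": b"payments-demo-key",
    "hr-idp": b"hr-demo-key",
}


def issue(idp, subject):
    """Stand-in for an IdP issuing a token for a user in its trust zone."""
    payload = json.dumps({"iss": idp, "sub": subject}, sort_keys=True).encode()
    signature = hmac.new(IDP_KEYS[idp], payload, hashlib.sha256).hexdigest()
    return payload, signature


def accept(trusted_idp, token):
    """Run by a microservice that trusts exactly one IdP."""
    payload, signature = token
    key = IDP_KEYS[trusted_idp]
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, signature):
        raise PermissionError("token was not signed by this zone's IdP")
    claims = json.loads(payload)
    if claims.get("iss") != trusted_idp:
        raise PermissionError("unexpected issuer")
    return claims
```

A token from the payments zone fails verification in the HR zone, so a cross-zone caller has to go through the gateway for that zone instead.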

While using a security token downstream from the API gateway provides user-level trust based on the authenticated user’s security context, this does not remove the need for service-level trust. Security should be applied at all layers and follow the defence-in-depth model. Lack of service-level trust could lead to use of a compromised token (or one that is generated by an attacker and signed using a compromised key) by agents that are not authorized to access microservice components that make up a specific application. Modern API systems allow the use of secure overlay networking to make it easier to establish service-level trust than by using service accounts or mutual TLS between the microservice components involved.
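
Where mutual TLS is the chosen mechanism for service-level trust, the server side of a microservice might be configured along these lines using Python's standard `ssl` module. The function name and file paths are placeholders, and certificate provisioning is outside the scope of this sketch.

```python
import ssl


def build_server_context(ca_file=None, cert_file=None, key_file=None):
    """Build a server-side TLS context that requires a client certificate.

    The file arguments are placeholders: ca_file would be the internal CA
    that signs service certificates, and cert_file/key_file would be this
    service's own certificate and private key.
    """
    context = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    # Reject any peer that does not present a certificate we can verify.
    context.verify_mode = ssl.CERT_REQUIRED
    if ca_file is not None:
        context.load_verify_locations(cafile=ca_file)
    if cert_file is not None:
        context.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return context
```

With this in place, a caller must prove it is a known service before any token is examined, which keeps the user-level token check as a second layer rather than the only one.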

Summary

Modern service-oriented applications are often decomposed into microservices exposed through APIs. It is important to understand what microservices and APIs are and the role they play in the application system. When adopting an architecture based on APIs and microservices, use API gateways where possible to provide a single point of security policy enforcement, and establish end-to-end user-level trust on top of service-level trust to ensure security is applied at multiple layers.

In Part 2 you will learn why authZ may be needed, which protocols can be used for authN and authZ, what to do when an API is invoked by applications and services outside its trust boundary, additional security policies to consider beyond authN and authZ (for example JSON threat protection and rate-limiting), logging and monitoring considerations, as well as how group policies can help build a more secure API and microservices-based application.

Related Links and Further Reading

“Service-Oriented Architecture Scenario” by Yefim Natis (Gartner):

https://www.gartner.com/doc/391595/serviceoriented-architecture-scenario

“Identity Propagation in an API Gateway Architecture” by Robert Broeckelmann:

https://medium.com/@robert.broeckelmann/identity-propagation-in-an-api-gateway-architecture-c0f9bbe9273b
